
The Unseen Transaction: Decoding the Web's Hidden Currency

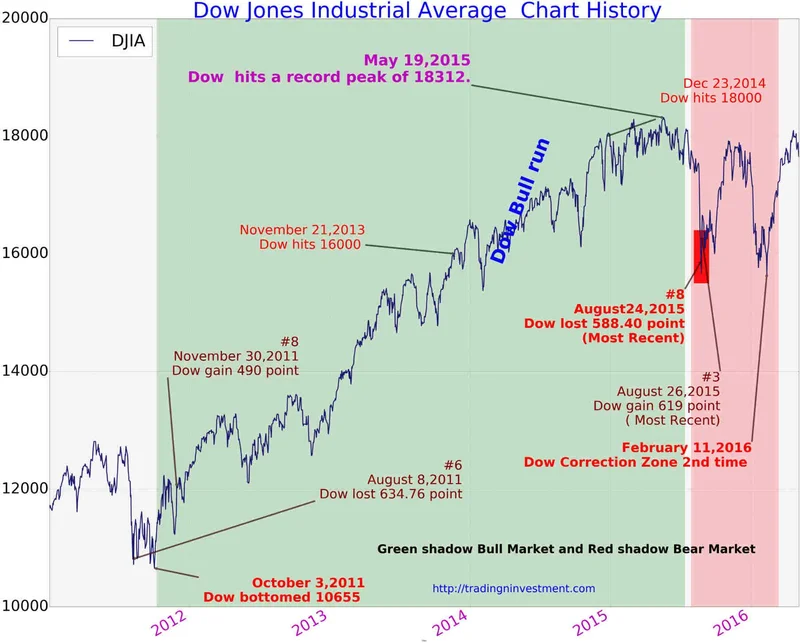

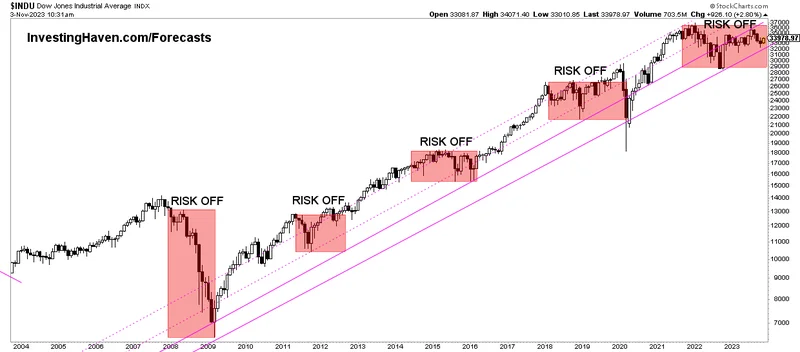

You open a link to check how the Dow Jones is doing today, and instead of a ticker, you hit a wall. A sterile white page, no branding, just cold, black text: "Access to this page has been denied." The reason? You’re suspected of being a bot. The system detected something anomalous, such as a disabled script or a blocked cookie, and its defenses kicked in. You’re presented with a cryptic reference ID, a digital fingerprint of your rejection.

Most people would just hit the back button, maybe clear their cache, and move on. But for an analyst, this isn't an error message. It's a glimpse of the machinery. It’s the brief moment the curtain is pulled back on the intricate, automated, and deeply transactional nature of the modern internet. You weren't denied access because you're a security threat; you were denied access because you failed to present the proper payment. And the currency, it turns out, is you.

The Architecture of Observation

When you successfully load a webpage (say, "S&P 500 posts winning week, but Friday rally fizzles: Live updates" from a major outlet like NBCUniversal), a far more complex transaction occurs. It’s outlined, in excruciating detail, in documents that almost no one reads. I’m talking about the Cookie Notice, a legal disclosure that doubles as a business model blueprint. It’s not just a formality; it’s the terms and conditions of the barter.

The document calmly explains the deployment of "Cookies," a benign term for a constellation of tracking technologies. They’re broken down into categories that read like a surveillance operative’s toolkit. "Strictly Necessary Cookies" are the cost of entry, the basic functions. But then the scope expands rapidly. "Information Storage and Access Cookies" allow them to identify your specific device. "Measurement and Analytics Cookies" watch how you behave, what you click, how long you linger. This is the part of the operation that I find genuinely puzzling from a user-value perspective. We've accepted that our every digital twitch can be collected, aggregated, and used to "improve the content," but what is the quantifiable measure of that improvement for the end-user? Is the trade (a free article about the U.S. stock market today in exchange for a permanent, cross-platform behavioral profile) a fair one?

The system is like a modern smart home. You get to live in it for "free," but the cost is that every light switch you flip, every show you watch, and every conversation you have is logged and analyzed by the home's manufacturer. They use this data to "personalize your experience" (serve you ads for the brand of coffee they saw on your counter) and "improve their services" (build a better, more efficient surveillance home for the next occupant). You can, in theory, go into the basement and start flipping the dozens of circuit breakers to turn off the sensors in the living room or the microphone in the kitchen, but the default setting is total observation.

This model gets even more intricate with "Ad Selection and Delivery Cookies." These trackers collect your browsing habits not just on one site, but across the web, building a detailed psychographic profile. They know your interests, your preferences, your patterns. This is why you search for a new set of tires and are then haunted by tire ads for weeks. The data collected can even be used for "Content Selection," meaning the news articles and videos you see are algorithmically chosen for you. It’s a feedback loop of immense power, shaping not just your consumer habits but potentially your worldview.
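To make the mechanism concrete, here is a minimal sketch of how a shared third-party identifier could let a tracker stitch visits on unrelated sites into one profile. Everything in it is hypothetical: the `Tracker` class, its methods, the site names, and the cookie ID are illustrative assumptions, not any vendor's actual implementation.

```python
from collections import defaultdict


class Tracker:
    """Hypothetical third-party tracker embedded on many sites."""

    def __init__(self):
        # Maps a cookie ID to the list of (site, topic) pairs seen for it.
        self.profiles = defaultdict(list)

    def record_visit(self, cookie_id, site, topic):
        # The same cookie ID arrives no matter which site embeds the tracker,
        # so visits on unrelated sites land in a single profile.
        self.profiles[cookie_id].append((site, topic))

    def interests(self, cookie_id):
        # Count how often each topic appears across all sites.
        counts = defaultdict(int)
        for _site, topic in self.profiles[cookie_id]:
            counts[topic] += 1
        # Most frequent topics first; ties broken alphabetically.
        return sorted(counts, key=lambda t: (-counts[t], t))


tracker = Tracker()
tracker.record_visit("abc123", "tire-shop.example", "tires")
tracker.record_visit("abc123", "news.example", "finance")
tracker.record_visit("abc123", "car-forum.example", "tires")

top_interests = tracker.interests("abc123")
print(top_interests)  # ['tires', 'finance']
```

In this toy version, one search for tires on one site is enough to rank "tires" first everywhere the same identifier shows up, which is the haunting effect described above.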

The Illusion of Control

The defenders of this model will point to the "COOKIE MANAGEMENT" section of the policy. They’ll argue that control is given to the user. On the surface, this appears true. The document provides links to browser settings, analytics provider opt-outs, and advertising alliance portals. It’s a gesture toward transparency.

But look closer. To truly opt out, a user must navigate a dizzying maze of settings across multiple browsers, devices, and third-party websites. The policy lists opt-out pages for Google, Facebook, Twitter, and LiveRamp, adding the crucial caveat: "this is not an exhaustive list." The user is tasked with managing preferences on their laptop, their phone, their smart TV, and any other "connected devices." It’s not a single switch; it’s a hundred of them, scattered across a dozen different platforms, each with its own interface and terminology.
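The arithmetic behind that maze can be sketched in a few lines. The device and browser setup below is a made-up assumption for one hypothetical user, and the sketch simplifies by treating every opt-out as a preference stored separately in each browser. Only the four provider names come from the policy, which itself says its list is not exhaustive.

```python
from itertools import product

# Providers named in the policy (which notes the list is not exhaustive).
providers = ["Google", "Facebook", "Twitter", "LiveRamp"]

# Assumed setup for one hypothetical user: browsers installed per device.
browsers_by_device = {
    "laptop": ["Chrome", "Firefox"],
    "phone": ["Safari"],
    "smart TV": ["built-in browser"],
}

# Simplifying assumption: each (device, browser) pair keeps its own
# preferences, so an opt-out must be repeated once per pair.
stores = [
    (device, browser)
    for device, browsers in browsers_by_device.items()
    for browser in browsers
]

# Every store has to be paired with every provider's opt-out page.
opt_out_steps = list(product(stores, providers))
print(f"{len(stores)} stores x {len(providers)} providers = {len(opt_out_steps)} opt-outs")
```

Even this modest setup yields sixteen separate actions, before counting any provider the policy does not name.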

The complexity is staggering. The policy itself links out to about a dozen different management tools and policies that the user is expected to handle. This isn't a system designed for user empowerment; it's a system designed for legal compliance that relies on user fatigue. It presents the illusion of choice while architecting an environment where the default, total data collection, is the path of least resistance.

This raises a fundamental methodological question. If exercising a right requires a user to possess a high degree of technical literacy and the patience to navigate a fragmented, intentionally complex web of external websites, is it truly a right at all? At what point does complexity become a form of coercion? The "Access Denied" page is the system’s hard wall. The cookie consent banner is its soft one. One blocks the machine, the other welcomes the human, but both serve the same master: the transaction.

You Are the Asset, Not the Customer

Let’s be precise about what’s happening here. This isn't a simple trade of ads for content. It is the systematic, cross-platform construction of a behavioral asset—your digital identity—which is then monetized in perpetuity. The complexity of the opt-out process isn't a bug; it's the most critical feature of the entire model. It ensures a low opt-out rate, maximizing the data harvest. We aren't the customers of the "free" internet. We are the product being sold. And the cookie notice isn't a privacy policy; it's the factory's operating manual.